This image delivers a production-ready AI Service environment built on Ubuntu 24.04 LTS and optimized for NVIDIA® GPU acceleration. It provides a secure, stable, and high-performance platform for AI inference, embeddings, and vector workloads on Azure.

Effortless installation. Ready-to-run with easy maintenance. Explore now for a smooth experience!

Connection to AI Service with GPU NVIDIA® Driver+CUDA® based on Ubuntu 24.04

- Please note the following before purchasing: the GRID drivers redistributed by Azure don’t work on most non-NV series VMs, such as the NC, NCv2, NCv3, ND, and NDv2 series, but they do work on the NCasT4v3 series. Learn more: Azure N-series GPU driver setup for Linux (Azure Virtual Machines, Microsoft Learn).

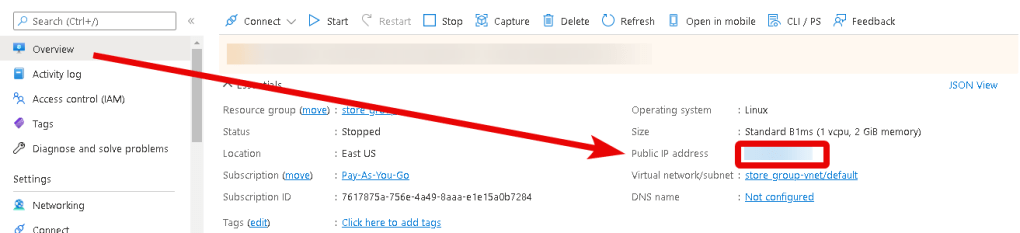

- After purchasing and starting the VM, you need its IP address. You can find it in the Azure portal: select the virtual machine from the list and click “Overview”. The address is displayed in the “Public IP Address” line.

- For proper operation, TCP port 8080 must be open. You can check this in the network settings of your VM.

- To manage the server, connect to the VM over SSH using one of the following methods.

- With OpenSSH

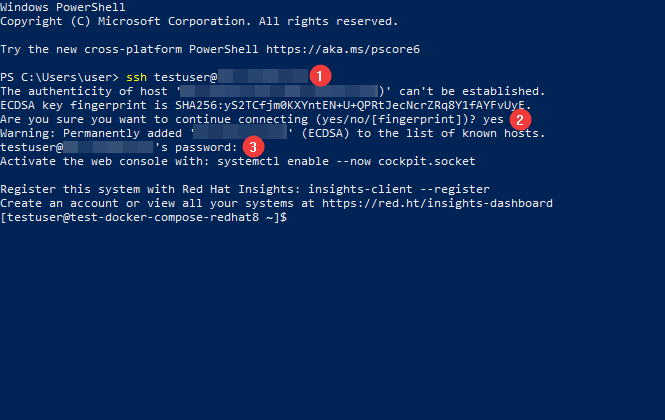

Windows 10 (starting with version 1809) and later include an OpenSSH client that you can use to connect to Linux servers via SSH. Open a Windows command prompt and enter the command “ssh user@*vm_ip*” (1), where “user” is the username specified when the virtual machine was created and “*vm_ip*” is the VM IP address.

Then type “yes” (2) to accept the host key and enter the password (3) that was specified when the virtual machine was created.
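As a minimal sketch of the command described above, the snippet below assembles the `user@vm_ip` target from two variables. The values `azureuser` and `203.0.113.10` and the helper name `ssh_target` are placeholders for illustration only, not values from this image. Replace them with the username and public IP address of your own VM. The snippet assumes a bash shell; in a Windows command prompt, type the resulting `ssh` command directly.

```shell
#!/usr/bin/env bash
# Placeholders (assumptions): use the username and public IP of your own VM.
VM_USER="azureuser"
VM_IP="203.0.113.10"

# Hypothetical helper: print the user@host target for the ssh command.
ssh_target() {
  printf '%s@%s\n' "$1" "$2"
}

# Show the command to run; the first connection asks you to confirm the host key.
echo "Run: ssh $(ssh_target "$VM_USER" "$VM_IP")"
```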

- With the PuTTY application

Alternatively, you can connect via SSH using the PuTTY application. You can download it at the following link: Download

Run PuTTY, enter the VM address in the “Host” field (1), and click “Open” (2) to connect.

In the window that appears, click Accept.

In the console that opens, enter the username (1) and the password (2) that were specified when the VM was created (the password is not displayed in the console as you type it).

- You can view the parameters of your virtual machine using the commands:

$ nvidia-smi – show the NVIDIA GPU driver version and GPU status

$ nvcc -V – show the CUDA toolkit version

$ ollama -v – show the Ollama version

$ ollama list – list the installed Ollama models

$ sudo systemctl status openwebui – check the Open WebUI service status
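The checks above could also be run in one pass. The following is a rough sketch, assuming a bash shell on the VM. The helper name `check_tool` is hypothetical, and the service name `openwebui` is taken from the command above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report whether a command is available on PATH.
check_tool() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "$1: found"
  else
    echo "$1: missing"
  fi
}

# Tools named in this guide.
for tool in nvidia-smi nvcc ollama; do
  check_tool "$tool"
done

# Print the state of the openwebui unit (for example "active" or "inactive").
# '|| true' keeps the script going when the unit is not active.
if command -v systemctl >/dev/null 2>&1; then
  systemctl is-active openwebui || true
fi
```

A "missing" line only means the command is not on the current user's PATH. It does not by itself prove that the component is not installed.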

- By default, the llama3:latest model is installed. You can install other models with the command sudo ollama run *model_name*. For example: $ sudo ollama run qwen3:30b. Find more models in the Ollama library.

Warning! Add models only after steps 7–9. Adding models may require additional disk space (mounting a new disk).
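Because larger models may not fit on the existing disk, it can help to check free space before adding one. The sketch below is only an illustration. The 20 GiB threshold is an arbitrary example value, the helper name `free_gib` is hypothetical, and checking the root filesystem (`/`) is an assumption that may not match where this image stores its models.

```shell
#!/usr/bin/env bash
# Hypothetical helper: free space, in whole GiB, on the filesystem holding a path.
# 'df -Pk' prints one POSIX-format line per filesystem, sizes in 1024-byte blocks.
free_gib() {
  df -Pk "$1" | awk 'NR==2 { print int($4 / 1024 / 1024) }'
}

# Example threshold (assumption): adjust to the size of the model you plan to add.
REQUIRED_GIB=20
# Assumption: models are stored on the root filesystem; change the path if not.
MODEL_PATH="/"

available=$(free_gib "$MODEL_PATH")
if [ "$available" -ge "$REQUIRED_GIB" ]; then
  echo "OK: ${available} GiB free on ${MODEL_PATH}"
else
  echo "Low space: ${available} GiB free on ${MODEL_PATH}, ${REQUIRED_GIB} GiB wanted"
fi
```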

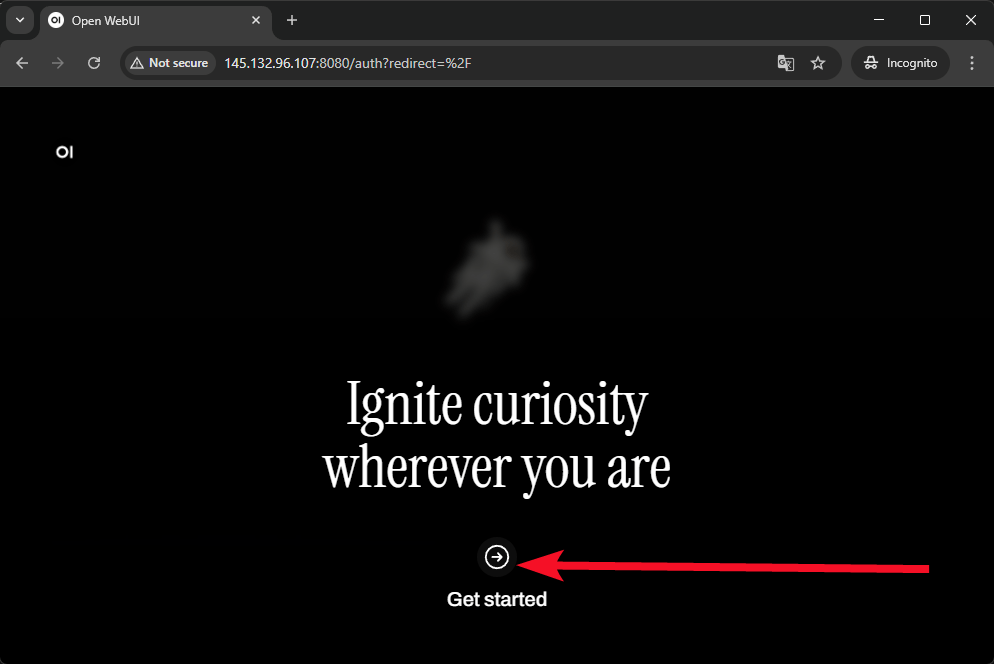

- To connect to AI WebUI, open your browser and enter http://your_VM_IP:8080

- Click Get started

- To begin, you will be asked to create an administrator account. Enter Name (1), Email (2), Password (3), and click Create admin account (4)

- The welcome window appears. You can close it.

- To see all models available for interaction, expand the list of models.

- You can now start a conversation with the selected model.

Now you can use our well-prepared AI Service with GPU NVIDIA® Driver+CUDA® based on Ubuntu 24.04.
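If the WebUI page does not load in the browser, a quick check from a machine with bash and curl can help tell a network problem from a service problem. This is a hedged sketch: the script name `check_webui.sh` and the helper `webui_url` are hypothetical, and port 8080 is the port stated in this guide.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the WebUI URL for a VM address (port 8080 per this guide).
webui_url() {
  printf 'http://%s:8080\n' "$1"
}

# Pass the public IP address of your VM as the first argument.
VM_IP="${1:-}"

if [ -z "$VM_IP" ]; then
  echo "usage: check_webui.sh <vm_ip>"
elif curl -s -o /dev/null --max-time 5 "$(webui_url "$VM_IP")"; then
  # curl exits with 0 when the server returns any HTTP response.
  echo "WebUI answered at $(webui_url "$VM_IP")"
else
  echo "No answer at $(webui_url "$VM_IP"): check that TCP port 8080 is open and the openwebui service is running"
fi
```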